Turning popular links from your Mastodon timeline into an RSS feed

Ever since a certain someone bought Twitter, I’ve been quite invested in making the Fediverse the main home for my social web activity. My Mastodon timeline does a good job for short-form text updates, and Pixelfed is a great nascent Instagram replacement.

One thing I particularly enjoy about both of them is that they bring back the simple, chronological timeline. Having an actual timeline makes it (to me) a lot easier to orient oneself and understand the temporal context of posts. For example, it’s easy to jump back and forth in time, instead of seeing disconnected posts.

Of course, a strictly chronological timeline comes with one drawback: it’s extremely hard to catch up with everything that happened, as there’s no “What you missed” feature. This already bugged me back in the early days of my own Twitter use in ~2011, when Twitter itself was still purely chronological. In those days, I made a bad attempt at aggregating the most popular links posted to Twitter, in order to see which external things people found worth sharing. Between API changes and life, I abandoned that at some point.

I thought it would be nice to resurrect that idea for my own Mastodon use though: What if I could keep up with the links that were most often posted into my own Mastodon timeline, by having them aggregated into an RSS feed? And then I stumbled upon Adam Hill’s fediview, which is similar in that it aggregates the most popular posts instead and digests them into an email.

Inspired by that, I made a small, Django-based web app that implements this approach for aggregating links into an RSS feed. It allows logging in through the Mastodon OAuth flow, which then provides access to one’s timeline. In the background, a small cron job regularly fetches the timeline to get the current posts and saves all links observed. Once a day, another cron job aggregates the top X links into a regular RSS feed that one can subscribe to with one’s RSS reader of choice. Another set of background tasks regularly deletes older posts, as the idea isn’t to create a permanent archive but a transient collection for catching up in the moment.
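To make the “saves all links observed” step a bit more concrete, here is a minimal sketch of how links could be pulled out of a post. It is not the app’s actual code: `LinkExtractor` and `extract_links` are hypothetical names, and it assumes that a post’s content arrives as HTML in which mentions and hashtags are anchors carrying a `mention` or `hashtag` class, so that only external links remain.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect href targets of <a> tags, skipping mentions and hashtags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        classes = (attributes.get("class") or "").split()
        href = attributes.get("href")
        # Assumption: mention and hashtag anchors carry these class names.
        if href and not {"mention", "hashtag"} & set(classes):
            self.links.append(href)


def extract_links(status_html):
    """Return the external links found in one post's HTML content."""
    parser = LinkExtractor()
    parser.feed(status_html)
    return parser.links
```

Each cron run could call something like `extract_links` on every fetched post and store the result together with the account that posted it.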

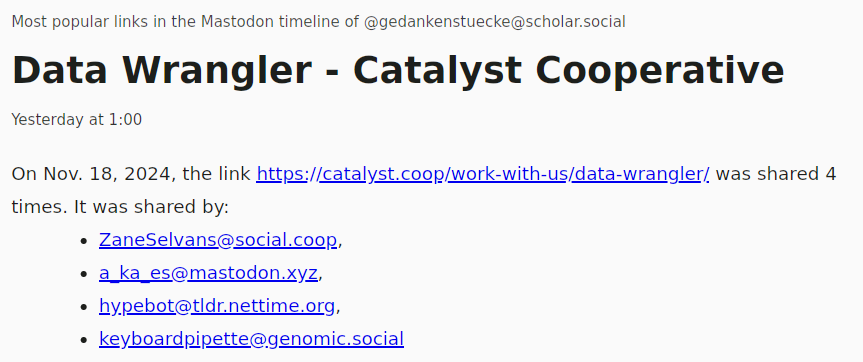

How a single link is displayed in the RSS feed

So far, there are only very few settings, namely the maximum number of items the feed should have per day, and whether your feed should include backlinks to the posts in which the links were shared. The “algorithm” for the aggregation is also quite simple: For all the links seen during the last calendar day, sum up how often each of them was seen and rank them based on that. Then put the top X of them into an RSS feed. Duplicate links (i.e. the same user posting the same link more than once) are counted only once. This avoids the “problem” of some folks e.g. boosting or re-sharing links to e.g. their own blog posts.
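The ranking described above can be sketched in a few lines of Python. This is an illustration rather than the app’s real implementation: `top_links` is a hypothetical function, the input is assumed to be the (account, link) pairs collected during one calendar day, and breaking ties alphabetically by URL is my own assumption to keep the output stable.

```python
from collections import defaultdict


def top_links(observations, limit):
    """Rank links by the number of distinct accounts that shared them.

    `observations` is an iterable of (account, url) pairs seen during one day.
    """
    sharers = defaultdict(set)
    for account, url in observations:
        # A set ignores repeat shares of the same link by the same account.
        sharers[url].add(account)
    # Most-shared first; ties are broken by URL (an assumption, see above).
    ranked = sorted(sharers.items(), key=lambda item: (-len(item[1]), item[0]))
    return [(url, len(accounts)) for url, accounts in ranked[:limit]]
```

Here `limit` plays the role of the “top X” setting, and the returned counts could be shown next to each feed item.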

Lastly, I also decided to keep the whole setup as lightweight as my limited skills allowed, mainly because I wanted to be able to easily deploy it to my uberspace. That’s why the focus is on cron jobs instead of more advanced task management and queues. And it turns out it works really well: a small instance for trying it out can be found here. Of course you can also run your own; hopefully the documentation I wrote is complete enough for that. Bug reports, feature requests and contributions are also welcome on Codeberg.